This year I attended BASTA for the first time (together with my colleagues). Remotely, because I did not want to travel to Mainz for it. And only for two days: one session day (Tuesday) and one workshop day (Friday). Here are a few notes on Tuesday…

Keynote: Zurück in die Zukunft: AI-driven Software Development (Back to the Future: AI-driven Software Development)

Presented by Jörg Neumann and Neno Loje (link to the video on entwickler.de)

I liked the keynote surprisingly much. Probably because I have not used artificial intelligence at work so far, the topic is exciting, a lot is changing, and I will certainly use it in the future.

General: code generation via ChatGPT, training an AI on your own data: https://meetcody.ai/

Integrating AI into development: Cody, GitHub Copilot (plugins for VS Code, Visual Studio, JetBrains IDEs)

- Have code written (based on comments)

- Have code analyzed

- Have code adapted / improved

- Have unit tests generated

Azure: set up your own ChatGPT

theforgeai.com: combine several AIs and define workflows (different roles, as in an agile team)

Conclusion: I think AI can help us. We would need to identify scenarios where it is useful and then try it out there. Data and code security must of course be ensured. We also need the know-how to analyze and improve whatever the AI changes or creates.

There are (too) few of us in our company: AI can take work off our hands. That would let us concentrate on other important things.

Sessions

1. Backends ausfallsicher gestalten (Designing fail-safe backends)

Presented by Patrick Schnell

Definition of fail-safety

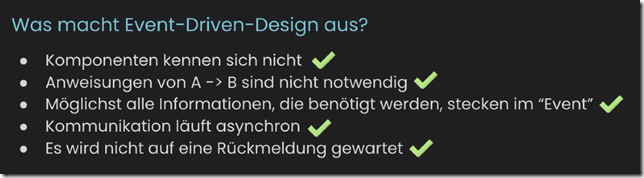

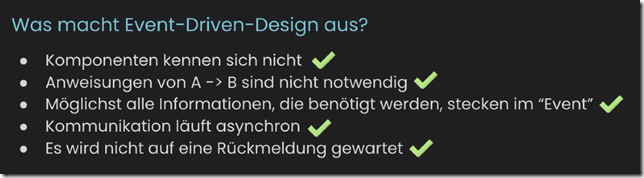

Event-driven design (EDD)

Modules that communicate over HTTP do not constitute EDD

Event hub / message queue

Examples: Redis (simple), RabbitMQ, …
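To make the difference to direct HTTP calls between modules more concrete, here is a minimal sketch of the event hub idea. It is my own illustration, not code from the talk: the `EventHub` class, the topic name, and the payload shape are hypothetical, and an in-memory queue stands in for a real broker such as Redis or RabbitMQ.

```python
from collections import defaultdict
from queue import Empty, Queue
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventHub:
    """Hypothetical in-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)
        self._queue = Queue()

    def subscribe(self, topic: str, handler: Handler) -> None:
        # Consumers register interest in a topic; publishers never see them.
        self._handlers[topic].append(handler)

    def publish(self, topic: str, payload: dict) -> None:
        # The publisher only enqueues the event and returns immediately.
        self._queue.put((topic, payload))

    def drain(self) -> int:
        """Deliver all queued events and return how many were delivered."""
        delivered = 0
        while True:
            try:
                topic, payload = self._queue.get_nowait()
            except Empty:
                return delivered
            for handler in self._handlers[topic]:
                handler(payload)
            delivered += 1


if __name__ == "__main__":
    hub = EventHub()
    hub.subscribe("order.created", lambda event: print("ship", event["order_id"]))
    hub.publish("order.created", {"order_id": 42})
    hub.drain()
```

The point of the sketch is the decoupling: the publishing module does not know which modules consume the event, and events published before a consumer runs simply wait in the queue.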

Stateful vs. stateless: pros and cons

Conclusion: The talk only scratched the surface. There was not much I could take away from it or that we could use. But it was good to revisit this concept.

2. Simple Ways to Make Webhook Security Better

Presented by Scott McAllister

webhooks.fyi: open source resource for webhook security

Webhook Provider – Webhook Message

why webhooks:

- simple protocol: http

- simple payload: JSON, XML

- tech stack agnostic

- share state between systems

- easy to test and mock

security issues: (listener does not know when a message will come through)

- interception

- impersonation

- modification / manipulation

- replay attacks

security measures:

- One Time Verification (example: Smartsheet: verifies that subscriber has control over their domain)

- Shared Secret

- Hash-based Message Authentication (HMAC)

- Asymmetric Keys

- Mutual TLS Authentication

- Dataless Notifications (listener only gets IDs, listener then has to make an authenticated API call)

- Replay Prevention (concatenate timestamp to payload with timestamp validation)
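Two of these measures, HMAC and replay prevention, combine naturally, so here is a minimal sketch of how a listener might check both. It is my own illustration under stated assumptions, not the scheme of any particular provider: the `timestamp.body` signing layout, the function names, and the five-minute tolerance are all hypothetical choices.

```python
import hashlib
import hmac
import time
from typing import Optional

TOLERANCE_SECONDS = 300  # assumed replay window, not a value from the talk


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    """Provider side: sign the timestamp together with the raw payload."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(
    secret: bytes,
    timestamp: int,
    body: bytes,
    signature: str,
    now: Optional[float] = None,
) -> bool:
    """Listener side: reject stale messages, then compare signatures."""
    current = time.time() if now is None else now
    if abs(current - timestamp) > TOLERANCE_SECONDS:
        return False  # too old or too far in the future: possible replay
    expected = sign(secret, timestamp, body)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

Because the timestamp is part of the signed message, an attacker cannot simply attach a fresh timestamp to a captured payload: the signature would no longer match.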

TODOs for Providers: https, document all the things (show payload example, demonstrate verification, show end-to-end process)

TODOs for Listeners: check for hash, signature

Conclusion: A good overview of the potential vulnerabilities, well illustrated using a GitHub webhook. Since we potentially provide webhooks as well (currently via "Dataless Notifications"), this is good to keep in mind.

3. Leveraging Generative AI, Chatbots and Personalization to Improve Your Digital Experience

Presented by Petar Grigorov

What is the best way for humanity to live / survive on earth: empathy, kindness, sharing stories

AI in CMS

AI in Personalization

Conclusion: A somewhat long-winded talk that never quite seemed to get to the point.

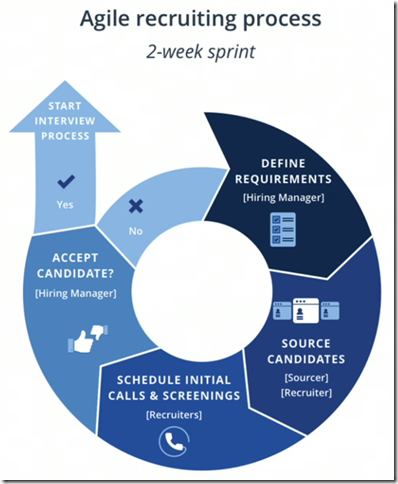

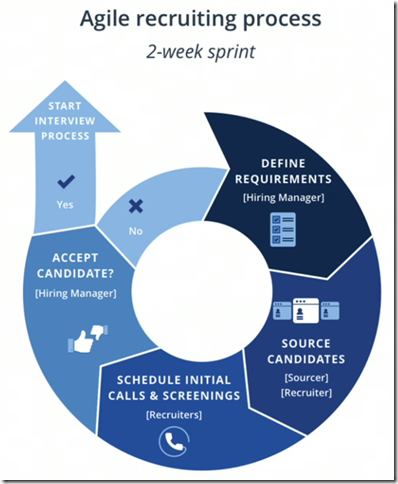

4. Agiles Recruitment und enttäuschte Erwartungshaltungen (Agile recruitment and disappointed expectations)

Presented by Candide Bekono

Agile job interview: have different people on the hiring team (assessment from different perspectives), authenticity

Gather feedback from candidates and the hiring team: after interviews, and on why a candidate does not want to join the company

Candidate-centric approach: tailor the recruiting experience to the candidates' needs, preferences, and expectations

Pipeline management: staying in touch with potential / former candidates (time-consuming)

Selection: define the essential criteria and measure them

Why agile recruiting processes: learn faster what works, experiment (e.g. adjust the job ad), adapt the process (requirements, sourcing, …)

Candidates' reflection: job fit, company culture

Employers' expectations:

- Skills, experience

- Cultural fit

- Communication, response time

- Candidates' interest and enthusiasm

- Long-term commitment

Conclusion: In the hiring process, aim for shorter feedback cycles: try things out, evaluate, adapt (agile). The presenter also stressed repeatedly that the evaluation step must not be neglected (but often is, as it is with us).

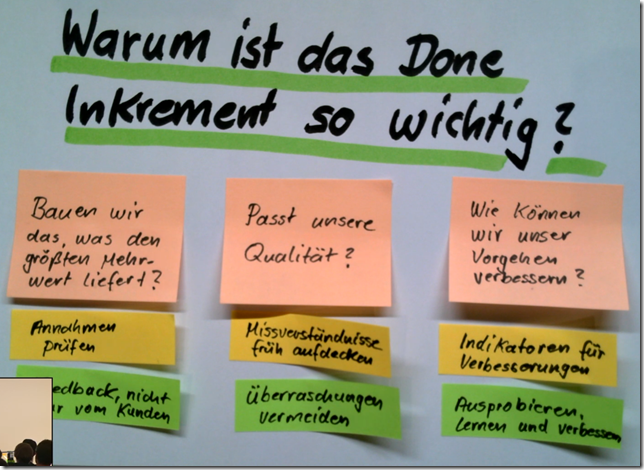

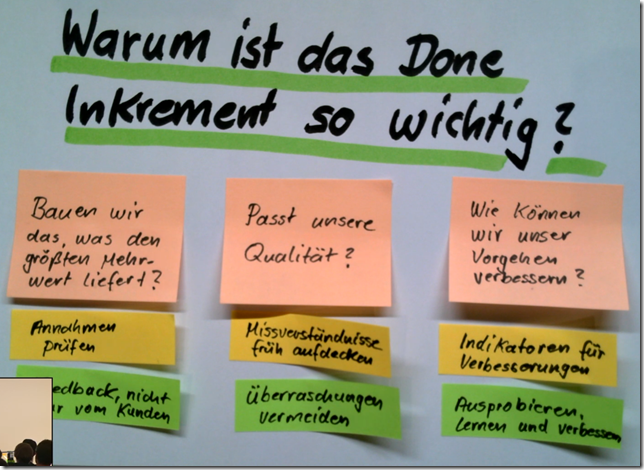

5. Done oder nicht done? Strategien für auslieferfähige Inkremente in jedem Sprint (Done or not done? Strategies for shippable increments in every sprint)

Presented by Thomas Schissler

Why Done increments: minimize uncertainty, reduce the time spent flying blind

-----

Are we building what delivers the greatest value?

Verify assumptions

Feedback, and not only from customers (technical feedback from developers)

-----

Is our quality right?

Uncover misunderstandings early

Avoid surprises

-----

How can we improve our approach?

Indicators for improvements

Try out, learn, improve

-----

Approach:

- Define an end-to-end slice

- Define acceptance criteria

- Leave out special cases for now

Swarming: all developers work on one project instead of on different ones, so that at least some projects are finished within the sprint

Side effects of swarming: in the daily scrum and sprint planning, people no longer talk past each other or merely work side by side (there is more interest because everyone works on the same project)

Continuous integration: branches should not really get old, since they should be merged into development within a sprint if possible (see also feature toggles instead of branches)
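As a reminder to myself of what "feature toggles instead of branches" can look like, here is a minimal sketch. It is my own illustration, not from the talk: the `FEATURE_` environment variable convention, the `new_discount` flag, and the `checkout_total` function are hypothetical.

```python
import os
from typing import Iterable, Mapping, Optional


def is_enabled(flag: str, env: Optional[Mapping[str, str]] = None) -> bool:
    """Read a toggle from the environment (hypothetical FEATURE_<NAME> convention)."""
    source = os.environ if env is None else env
    value = source.get(f"FEATURE_{flag.upper()}", "")
    return value.strip().lower() in {"1", "true", "on"}


def checkout_total(
    prices: Iterable[float], env: Optional[Mapping[str, str]] = None
) -> float:
    total = sum(prices)
    if is_enabled("new_discount", env):
        # Unfinished feature: already merged, but switched off by default.
        total = round(total * 0.9, 2)
    return total
```

The unfinished code path is merged early and stays dark until the toggle is switched on, so the branch never has time to diverge from development.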

Continuous testing: test in parallel with development, not only afterwards

Passion for the product can be increased through Done increments

Definition of Done: set by the developers and adjusted again and again (for the next sprint) to avoid existing problems (increment not shippable, things that slow the team down)

Conclusion: A captivating talk (above all because of the speaker) about topics that we, too, could integrate more into our daily work.