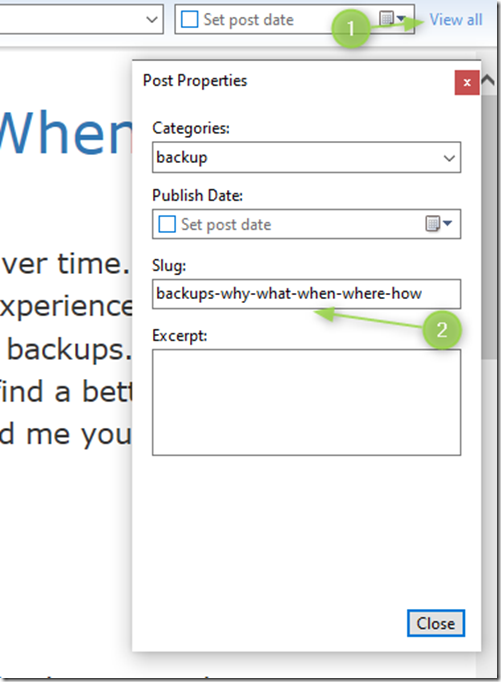

Backups: Why? What? When? Where? How?

- Posted in:

- backup

(This is a blog post in progress. It will change over time. For now it is just an amalgamation of my current thoughts and experiences. I have not yet bothered to read dedicated literature regarding backups. I probably forgot some things I already know. You will probably find a better write-up on backups somewhere else. If you do, please send me your source :). I mean it.)

Why backups?

There are a myriad of reasons why you should back up your data:

- disk failure

- disk sectors not readable anymore

- whole disk not readable due to driver error or similar

- external physical force destroyed disk

- accidental erasure

- accidental change of data

- loss of device with data

- fire or other external hazards

- theft

- accidentally leaving device somewhere unrecoverable

- loss of access to a service where your data resides

- service locks you out of your account because of some dispute, or the service is down for good

- no access to password, email account, etc. to access service

It all depends on your personal preferences and how tragic a loss of data would be for you. This might be memories (photos, diaries), time investment (documents, programming code, access to password manager), …

What to back up?

In essence the answer is: everything you want to keep and cannot already restore via some method from somewhere else. Here are a few pointers:

- documents

- programming code

- databases

- hosted web pages

- invoices

- emails

- calendars

- bookmarks

- software / games downloads (if bought)

- software configurations (it can take quite some time to re-configure software so it behaves like with an old installation)

- pictures

- videos

- audio files

- data to access your password manager

- license keys (for software)

When to back up?

As often as possible, but rarely enough that the process does not hinder your "normal" activities. It is a tradeoff between how much data you are willing to lose and how much time, money and energy you want to invest in backups.

Where to back up to?

It depends on your personal taste for safety and data access. You can back up your data to external storage (for instance an external SSD), external meaning outside your device(s). You could keep that external storage at home, but it could fall victim to a fire or to theft along with your devices. So keeping the backup storage in a separate location from the devices is usually good practice, for instance at a friend's house.

You can also back up your data to the cloud or a personal server. Be sure to check your access to the external service / server regularly.

How to back up and restore?

This depends on what you want to achieve. Here are a few scenarios:

Disk failure:

- restore last backup image of old disk on new disk

- restore data from last backup on new disk (possibly install operating system and software anew)

Accidentally deleted / overwritten data since last backup:

- restore single files from last backup

- revert files to a previous state (if using repository-like backup)

Accidentally deleted / overwritten data before last backup:

- restore files from a backup that is older than the accident

- restore files from incremental backups from the time before accident happened

- revert files to a state before the accident (if using repository-like backup)

Loss of access to service with data:

- back up data beforehand via an export option in the service (choose the appropriate contents and format)

- find a service (or local software) where you can import the backup you made from the service to which you lost access

Loss of device with data:

- get new / used device, install operating system and software anew, restore data from last backup

Backup Tools:

Automate as much as you can; otherwise you will be more prone to procrastinate on your backups. There are many backup tools out there.

disk image: Clonezilla

data backup from one disk to another (external) disk: SyncBack

upload backups to AWS: JungleDisk (now CyberFortress)

Do not forget to back up your backup configurations if you want to set up those services again faster next time.

Restoring:

Check intermittently that you can restore the data you backed up. It can be very frustrating if you rely on a backup and it is not restorable or does not contain the data you expect.
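One way to do such a check is to compare checksums of the original files with their copies. Here is a minimal sketch in Python, assuming the backup is a plain directory copy and that the source has not changed since the backup was made. The function names `file_digest` and `find_backup_problems` are made up for this illustration and do not belong to any of the tools above.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_backup_problems(source: Path, backup: Path) -> list:
    """List files that are missing from the backup or differ from the source."""
    problems = []
    for src_file in sorted(source.rglob("*")):
        if not src_file.is_file():
            continue
        relative = src_file.relative_to(source)
        copy = backup / relative
        if not copy.is_file():
            problems.append(f"missing: {relative.as_posix()}")
        elif file_digest(src_file) != file_digest(copy):
            problems.append(f"differs: {relative.as_posix()}")
    return problems
```

An empty result only says that the copies match the current originals. It does not replace an actual test restore onto a different disk or device.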

BASTA! Herbst 2023 – Tuesday

- Posted in:

- BASTA

This year I attended BASTA for the first time (together with my colleagues). Remotely, because I did not want to travel to Mainz for it. And only for two days: one session day (Tuesday) and one workshop day (Friday). Here are a few notes on Tuesday…

Keynote: Zurück in die Zukunft (Back to the Future): AI-driven Software Development

Presentation by Jörg Neumann and Neno Loje (link to video on entwickler.de)

I liked the keynote surprisingly well. Probably because I have not used any artificial intelligence at work so far, the topic is exciting, a lot is changing, and I will certainly use it in the future.

general: code generation via ChatGPT; training AI on your own data: https://meetcody.ai/

Integrating AI into development: Cody, GitHub Copilot (plugins for VS Code, VS, JetBrains IDEs)

- have code written (based on comments)

- have code analyzed

- have code adapted / improved

- have unit tests generated

Azure: set up your own ChatGPT

theforgeai.com: combine multiple AIs and define workflows (different roles, as in an agile team)

Conclusion: I think AI can help us: we would need to identify scenarios where AI can help us and then try it out there. Data and code security must of course be ensured. We also need the know-how to analyze and improve what the AI changes or creates.

We are (too) few people in our company: AI can take work off our hands. That lets us concentrate on other important things.

Sessions

1. Backends ausfallsicher gestalten (Designing Fail-Safe Backends)

Presentation by Patrick Schnell

Definition of fail-safety

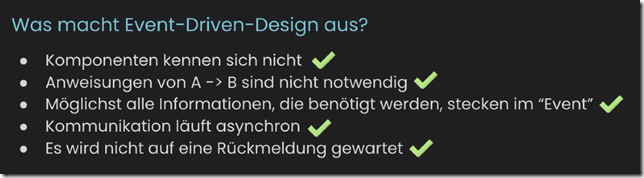

Event-Driven Design

Modules that communicate via HTTP do not count as EDD

Event hub / message queue

Examples: Redis (simple), RabbitMQ, …
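To make the difference from direct HTTP calls more tangible, here is a minimal in-process sketch of my own (not from the talk). A producer publishes events to a queue, and a consumer handles them independently. Python's standard `queue` and `threading` modules stand in for a real broker such as Redis or RabbitMQ; the event type `order_created` and the `handled` list are invented for this example.

```python
import queue
import threading

events = queue.Queue()
handled = []


def consumer() -> None:
    """Process events until the None sentinel arrives."""
    while True:
        event = events.get()
        if event is None:
            break
        handled.append(f"{event['type']}:{event['id']}")


worker = threading.Thread(target=consumer)
worker.start()

# The producer only publishes; it does not know who consumes the events.
for order_id in (1, 2, 3):
    events.put({"type": "order_created", "id": order_id})
events.put(None)  # sentinel: tell the consumer to stop
worker.join()

print(handled)
```

With a real broker the queue would live outside the process, so events could wait there while a consumer is down and be processed once it is back.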

Stateful vs. stateless: pros and cons

Conclusion: Only scratched the surface. Not much that I could take away from it, or that we can use. But it was good to bring this concept back to mind.

2. Simple Ways to Make Webhook Security Better

Presentation by Scott McAllister

webhooks.fyi: open source resource for webhook security

Webhook Provider – Webhook Message

why webhooks:

- simple protocol: http

- simple payload: JSON, XML

- tech stack agnostic

- share state between systems

- easy to test and mock

security issues: (listener does not know when a message will come through)

- interception

- impersonation

- modification / manipulation

- replay attacks

security measures:

- One Time Verification (example: Smartsheet: verifies that subscriber has control over their domain)

- Shared Secret

- Hash-based Message Authentication (HMAC)

- Asymmetric Keys

- Mutual TLS Authentication

- Dataless Notifications (listener only gets IDs; the listener then has to make an authenticated API call)

- Replay Prevention (concatenate a timestamp to the payload before signing, and validate the timestamp on receipt)

TODOs for Providers: HTTPS, document all the things (show payload example, demonstrate verification, show end-to-end process)

TODOs for Listeners: check for hash, signature
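As a note to myself, here is a minimal sketch of how a listener could combine HMAC verification with timestamp-based replay prevention, using only Python's standard library. The message layout (`timestamp.body`), the five-minute window and the function names `sign` and `verify` are my assumptions; real providers each define their own header names and signing scheme, so check their documentation.

```python
import hashlib
import hmac
import time
from typing import Optional

MAX_AGE_SECONDS = 300  # assumed tolerance window for replay prevention


def sign(secret: bytes, timestamp: int, body: bytes) -> str:
    """Compute an HMAC-SHA256 signature over 'timestamp.body'."""
    message = str(timestamp).encode() + b"." + body
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify(secret: bytes, timestamp: int, body: bytes, signature: str,
           now: Optional[float] = None) -> bool:
    """Accept a message only if it is recent and its signature matches."""
    current = time.time() if now is None else now
    if abs(current - timestamp) > MAX_AGE_SECONDS:
        return False  # outside the window: possibly a replayed message
    expected = sign(secret, timestamp, body)
    # compare_digest avoids leaking information through timing differences
    return hmac.compare_digest(expected, signature)
```

Because the timestamp is part of the signed message, an attacker cannot simply attach a fresh timestamp to an old payload. Within the time window a replay is still possible, so a stricter listener would also remember recently seen message IDs.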

Conclusion: A good overview of the potential vulnerabilities, well illustrated using a GitHub webhook. Since we potentially provide webhooks too (currently via "Dataless Notifications"), this is good to keep in mind.

3. Leveraging Generative AI, Chatbots and Personalization to Improve Your Digital Experience

Presentation by Petar Grigorov

What is the best way for humanity to live / survive on earth: empathy, kindness, sharing stories

AI in CMS

AI in Personalization

Conclusion: A somewhat long-winded talk that seemed to be getting to the point.

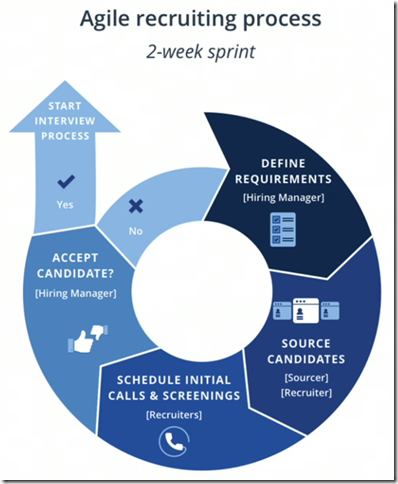

4. Agiles Recruitment und enttäuschte Erwartungshaltungen (Agile Recruitment and Disappointed Expectations)

Presentation by Candide Bekono

Agile job interview: have different people on the hiring team (assessment from different perspectives), authenticity

Gather feedback from candidates and the hiring team: after interviews, and on why a candidate does not want to join the company

Candidate-centered approach: adapt the recruiting experience to the candidates' needs, preferences and expectations

Pipeline management: keeping in touch with potential / former candidates (time-consuming)

Selection: define essential criteria and measure them

why agile recruiting processes: learn faster what works, try things out (adjust the job ad), adapt the process (requirements, sourcing, …)

Candidates' reflection: job fit, company culture

Employers' expectations:

- skills, experience

- cultural fit

- communication, response time

- interest and enthusiasm of the candidates

- long-term commitment

Conclusion: Aim for shorter feedback cycles in the hiring process; try things out, evaluate, adapt (agile). The presenter also stressed repeatedly that the evaluation must not be neglected (but often is, as with us).

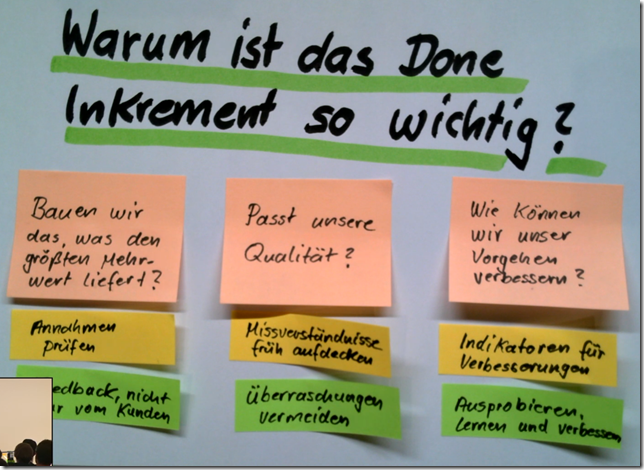

5. Done oder nicht done? Strategien für auslieferfähige Inkremente in jedem Sprint (Done or Not Done? Strategies for Shippable Increments in Every Sprint)

Presentation by Thomas Schissler

why Done increments: minimize uncertainty, reduce the time spent flying blind

-----

Are we building what delivers the greatest value?

Verify assumptions

Feedback, not only from customers (also technical feedback from developers)

-----

Is our quality right?

Uncover misunderstandings early

Avoid surprises

-----

How can we improve our approach?

Indicators for improvements

Try out, learn, improve

-----

Approach:

- define a vertical slice

- define acceptance criteria

- leave out special cases for now

Swarming: all developers work on one project instead of on different ones, so that at least some projects are finished within the sprint

Side effects of swarming: in daily scrum and sprint planning people no longer talk past each other or work side by side (more interest, because everyone works on the same project)

Continuous Integration: branches should not really get old, since they should be merged into development within a sprint if possible (see also feature toggles instead of branches)

Continuous Testing: do not test only after development, but in parallel

Passion for the product can be increased by Done increments

Definition of Done: adjustable by the developers; keep adapting it (for the next sprint) to avoid existing problems (not shippable, things that slow the team down)

Conclusion: A captivating talk (mainly because of the speaker) about topics that we, too, can integrate more into our daily work.

A better way to set recurring reminders in Slack

the task:

Set a recurring reminder in the meetings-channel so that every week a different person (rotating through all the people in the company) has the duty of being meeting-master – with the name of the person who is meeting master that week in the message.

the obstacle:

Setting recurring reminders in Slack via the /remind command is a pain. (I know the task is possible to set up with the vanilla /remind command, but it always takes me quite a while to figure out the right syntax after not using the command for a year.)

the occasion:

Every two weeks everyone at discoverize has the opportunity to use a few hours for something “funky”.

the path + the solution:

At first I thought I would program a .NET Core application which sends messages to Slack at the appropriate times with the appropriate person as meeting-master. Then my thoughts wandered to background tasks (which we currently also use in a different service). And I found Hangfire, which would persist the tasks for me, making my life easier, at the cost of relying on a third-party library.

I had already started to look into our Slack apps to create a new one (to follow this tutorial), but then realized that there probably already are Slack apps out there doing a better job of helping to create recurring reminders. And since my time was already dwindling away, I just searched and compared apps. I landed on RemindUs, installed it to our Slack and started using it. It seems to do the job well enough. Task accomplished.

update:

After testing RemindUs, I found that it did not suit our use case well. It did not convert a @user statement in a message into an alert to that user. Furthermore, the layout and text were those of a reminder (of course), which felt weird. We just want a message to appear repeatedly as if it had just been entered by a bot/user.

I researched other Slack apps, but only paid ones did what I needed. And for this simple usage I do not want to pay a monthly amount of 6 dollars or more.

So I reverted to vanilla Slack and the /remind command. Here is the usage in our use case:

/remind #_meetings "@anton - you are meeting master this week" on 08.05.2023 every five weeks

I then set up this reminder for every team member in the meeting-master rotation, each one starting one week after the previous one.
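Typing these commands by hand gets tedious, so here is a small sketch in Python that generates them for a whole rotation. It only builds the text to paste into Slack and does not talk to Slack itself. The function name `remind_commands` and all member names except anton are made up for this example.

```python
from datetime import date, timedelta

# Interval spelled out as a word, mirroring the command shown above.
NUMBER_WORDS = {2: "two", 3: "three", 4: "four", 5: "five",
                6: "six", 7: "seven", 8: "eight"}


def remind_commands(members, first_monday, channel="#_meetings"):
    """Build one /remind command per member, each starting one week later."""
    interval = NUMBER_WORDS[len(members)]
    commands = []
    for offset, member in enumerate(members):
        start = first_monday + timedelta(weeks=offset)
        commands.append(
            f'/remind {channel} "@{member} - you are meeting master this week" '
            f"on {start:%d.%m.%Y} every {interval} weeks"
        )
    return commands


if __name__ == "__main__":
    team = ["anton", "berta", "carl", "dora", "emil"]  # hypothetical rotation
    for command in remind_commands(team, date(2023, 5, 8)):
        print(command)
```

The repeat interval equals the number of people in the rotation, so each person comes up again exactly when everyone else has had a turn.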

Developer Open Space 2018

- Posted in:

- Developer Open Space

This year the Developer Open Space took place in a new location: Basislager Coworking. (Of course I have that space in my portal: https://coworking-spaces.info/basislager-coworking-leipzig) The atmosphere has improved, especially since we could use the rooms without having to close for the night between Friday and Sunday. The organization was top-notch, as every year – thanks Olga and Torsten. It was a pleasure to attend – I like to go there every year. What follows now are short notes and summaries:

Workshop: JavaScript, React and GraphQL

- we used Atomic Design to model our requirements

- Mike lectured us about GraphQL, we dove into Apollo GraphQL

- queries and mutations – separate components accordingly

- GraphQL simplifies data details in the queries (in comparison to REST services, for instance): you can specify what data exactly you want to retrieve without having to create a new service endpoint for that (in REST you need multiple end-points for different types and combination of types)

- GraphQL saves requests and data transfer, simplifies end-points

- many Links possible: decide which service takes care of which queries (client, state, http)

- Mike's library (see workshop branch)

- GraphQL hub and playground

- teamaton: We might use it for a side project, but it increases the complexity quite a bit (queries have to be written and translated).

Session: Cypress

- cypress is a framework for frontend testing which can be part of continuous integration (CI –> TeamCity)

- is an alternative to Selenium (or coypu)

- can run on headless chrome or electron, can run locally

- LocalStorage in browser can be manipulated (for instance to simulate logged-in user), to separate testing concerns

- cypress provides snapshots for each test step

- build up a library example:

- define features in cucumber (usually with QA)

- reuse steps where possible

- translate steps into cypress language

- teamaton: We might want to write integration tests for important features and run them after each stage deployment.

Session: Time Tracking

- HourGlass plugin for RedMine: connection to US/Bug –> immediate labels, open source, mobile App, Git integration

- programmers do not really want to track time – only if needed, it needs to be simple

- managers want to have a Freeze Time: everything that came before it (in that project) cannot be changed anymore (it has been transferred to an invoice)

- ManicTime: records which programs you have been working in – can be tagged afterwards

- Netresearch TimeTracker: JIRA synchronization (JIRA could be an alternative for us to Zendesk)

- teamaton: lemon: no example on marketing page (getlemon.com), so it cannot be shown very well (we had one in the olden times)

- teamaton: An integration with PivotalTracker does not seem reasonable: Starting US/Bug in PT results in starting record in lemon, record will be stopped as soon as US/Bug is finished in PT. Too many exceptions I can think of. Code review and testing would still need manual starts/stops in lemon.

Session: Key Performance Indicator

- formulate goals –> define success parameters –> measure (automated): predefine boundaries for “not good”, “good”, “very good”

- for instance “delight customers”: What are success parameters for teamaton? How can we measure them?

- ask customers what they want –> formulate goals out of feedback

- feature development: KPI: estimation vs. actual hours

- use KPIs for self reflection in team (for instance code issues per code line per person) –> celebrate successes

- teamaton: Do we have a need to make “delight customers” more measurable? I generally like the “ask customers what they want –> formulate goals out of feedback” – if we think about finding out what portal owners want.
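To make the "predefine boundaries" idea concrete, here is a minimal sketch in Python for the estimation-vs-actual KPI. The accuracy formula, the thresholds 0.7 and 0.9, and the function names are my own assumptions for illustration and were not given in the session.

```python
def estimation_accuracy(estimated_hours: float, actual_hours: float) -> float:
    """Return 1.0 for a perfect estimate, less the further off it was."""
    smaller = min(estimated_hours, actual_hours)
    larger = max(estimated_hours, actual_hours)
    return smaller / larger


def rate_kpi(value: float, good_from: float, very_good_from: float) -> str:
    """Map a measured value onto predefined bands (higher is better)."""
    if value >= very_good_from:
        return "very good"
    if value >= good_from:
        return "good"
    return "not good"


if __name__ == "__main__":
    accuracy = estimation_accuracy(estimated_hours=8, actual_hours=10)
    print(rate_kpi(accuracy, good_from=0.7, very_good_from=0.9))
```

Fixing the boundaries before measuring keeps the rating honest, because the bands cannot be moved after the numbers are in.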

Session: BenchmarkDotNet

- can be used after profiling to measure impact of changes in code

- BenchmarkDotNet should only be used on code fragment / function

- runs both code versions many, many times and compares results – can take some time

- Could it be used in Continuous Integration? Maybe for critical methods which need to be fast?

- teamaton: I think we do not need this.
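BenchmarkDotNet is a .NET library. To illustrate the same idea of running two versions of a small function many times and comparing the results, here is a sketch that uses Python's standard `timeit` module instead. The two string-joining functions and the `compare` helper are invented examples and have nothing to do with the BenchmarkDotNet API.

```python
import timeit


def join_with_loop(items):
    """Version A: build the string by repeated concatenation."""
    result = ""
    for item in items:
        result += item
    return result


def join_builtin(items):
    """Version B: let str.join do the work."""
    return "".join(items)


def compare(items, repeats=5, number=200):
    """Time both versions many times and keep the best run of each."""
    best = {}
    for func in (join_with_loop, join_builtin):
        runs = timeit.repeat(lambda: func(items), repeat=repeats, number=number)
        best[func.__name__] = min(runs)
    return best


if __name__ == "__main__":
    for name, seconds in compare(["x"] * 1000).items():
        print(f"{name}: {seconds:.6f} s")
```

Taking the minimum of several repeats reduces noise from other processes. The absolute numbers depend on the machine, so only the comparison between the two versions is meaningful.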

Session: Azure, AWS, Google Cloud

- abstraction of hardware and OS – no need to worry about these, you get CDNs

- might cost more on the face of it, but you save admin costs, license costs, scaling costs (paying just what you are using)

- Azure functions: lightweight processes

- firebase alternative: heroku

- teamaton: In the long term we probably need to be more flexible and reactive: adding resources if portals need more, moving quickly from a faulty instance (server) to another one. Oliver’s proposal to use containers might be a beginning in the mid term. Maybe using VMs to set up environments faster could also help.

Session: ASP.NET Core

- PageModel instead of Controller: controller and model in one

- .NET Core is generally faster than .NET Framework: less legacy, re-implemented from scratch

- teamaton: Use .NET Core for next new projects!

Session: How to Collect Requirements Well

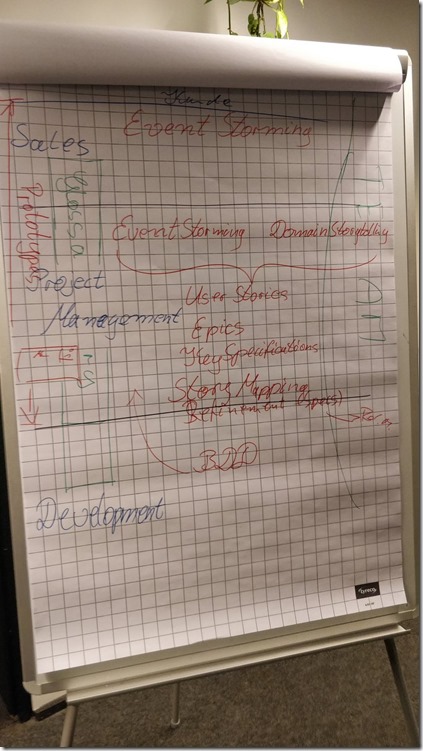

- flow: event storming and/or domain storytelling (customer with sales person) results in User Stories (one US per event or action), structure USs in Epics, add key specifications (gherkin: who+what+why) to USs, use Story Mapping to plan releases, refine USs with Specifications

- create prototypes (sketches) for fast feedback

- treat customer as part of the team: make sure team can contact customer with follow-up questions

- teamaton: Maybe use event storming to show the customer discoverize and see where the customer's process overlaps with our software and where the customer needs more features.

- teamaton: For more complex features we might want to use part of this flow: to better understand the needs of the customer, to address most questions beforehand, to make better architectural decisions, to implement features faster (because of better specifications).

Session: Nothing / Psychology

- be aware of the psychology of words: for instance “technical debt” – you might want to use a different term, like “software betterment”, “actually ok” – address the reservations

- men vs. women: there is a region “nothing” which only gets activated in men’s brains (in MRI scans): it seems men really can think about nothing if a subject does not fit a category (brain region), whereas women jump from one category to another if something does not fit (I have requested more literature on this, because I could not believe that there is such a definite distinction between the sexes. Will read about that soon.)

- “do sometime later” – We should ask ourselves: When is “sometime later”? Is it never?

- How can you change the behavior of someone (for instance to use a clean code pattern)?

- invite to make new experiences

- be a good role model

- prepare the change well

- allow for as few obstacles as possible, so that that someone does not have to jump too high / change too much

- try to do a meeting like an Open Space

- teamaton: Do more DiscoTECH: might result in perceptive improvements, could lead to feeling of mastery (one of the key motivators for creative people: autonomy, mastery, purpose)

Session: JavaScript Frameworks

- vue.js

- has an extra GUI to create projects, add libraries, install packages, run server

- similar to react/redux (state management)

- HTML not inline, but in a template

- change-management: looks like magic, difficult to grasp for big sites (simpler in react on component level)

- relatively light-weight

- ag-grid: table infrastructure for multiple JS frameworks

- Angular:

- a bit unwieldy, large

- documentation not as fast as platform development

- is here to stay: used by Google and Microsoft for projects

- discordapp: many discussions about JS frameworks in forums, fast help with problems

Session: Team Organization

- playing :) cooperative game (street kitchen) to test out different communication strategies (silence, without preparation, with preparation, with feedback loop, specialized, one meal per player, …)

- best score when everyone has a specialization on one or two tasks, helping out the tasks in the chain before or after, with directions in-between the players

Session: Distributed Teams

- use digital white board when brainstorming or talking about features

- Visual Studio has a live-sharing option: both people work on the same machine, no need to merge

- working in different time zones / during different times: it’s just a matter of getting used to it

- teamaton: information asymmetry due to people being in office or alone: tackle by posting a summary of discussions / infos in slack

- teamaton: get together more often (do not forget to repeat Brzezno)

Session: Hands-On Configuration of an Azure Function

- helping in a specific case: update information after every git commit to repository

Session: Objectives and Key Results

- objectives should be inspiring, motivating to help team outperform

- objectives should be challenging – therefore not often suitable for continuous development cycles (KPIs have been developed for product development)

- choose objectives so that they are achievable in 50% of the cases

- break down quarterly OKRs into 4-week priorities, and then into weekly priorities

- objectives could be: customer satisfaction, code health metrics like not too many bugs, team satisfaction, number of unit tests

- teamaton: Andréj already has looked into OKRs.

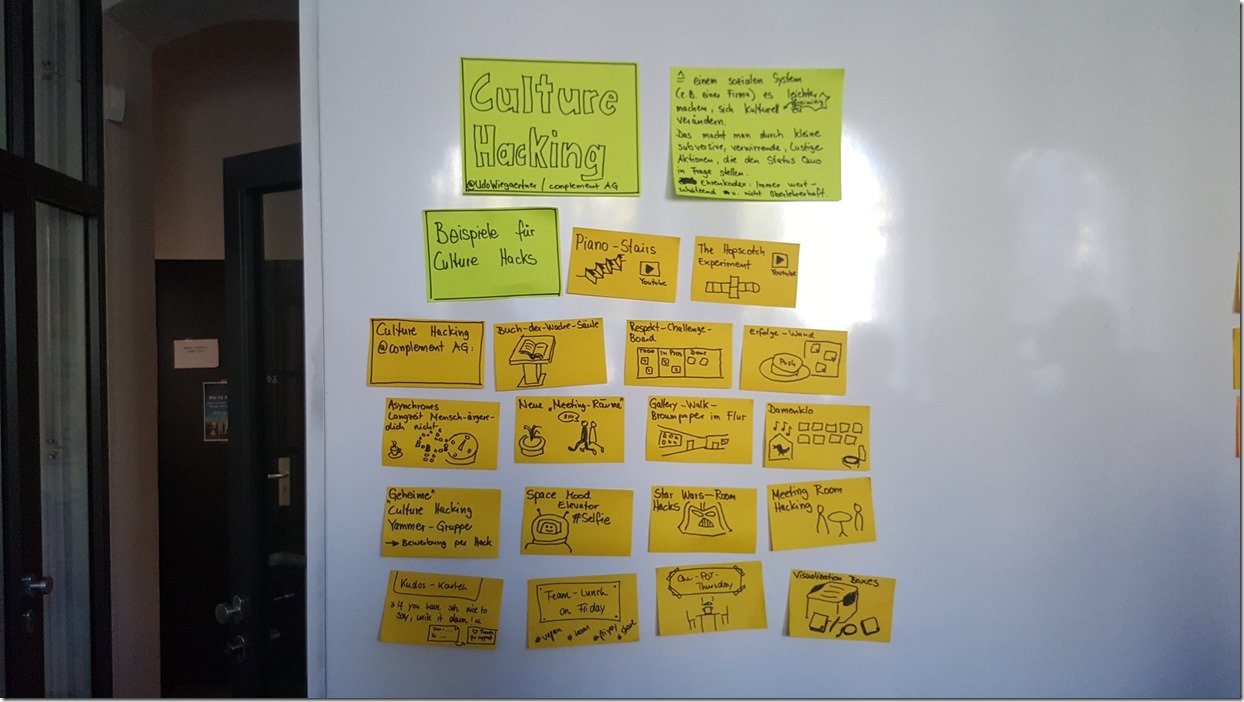

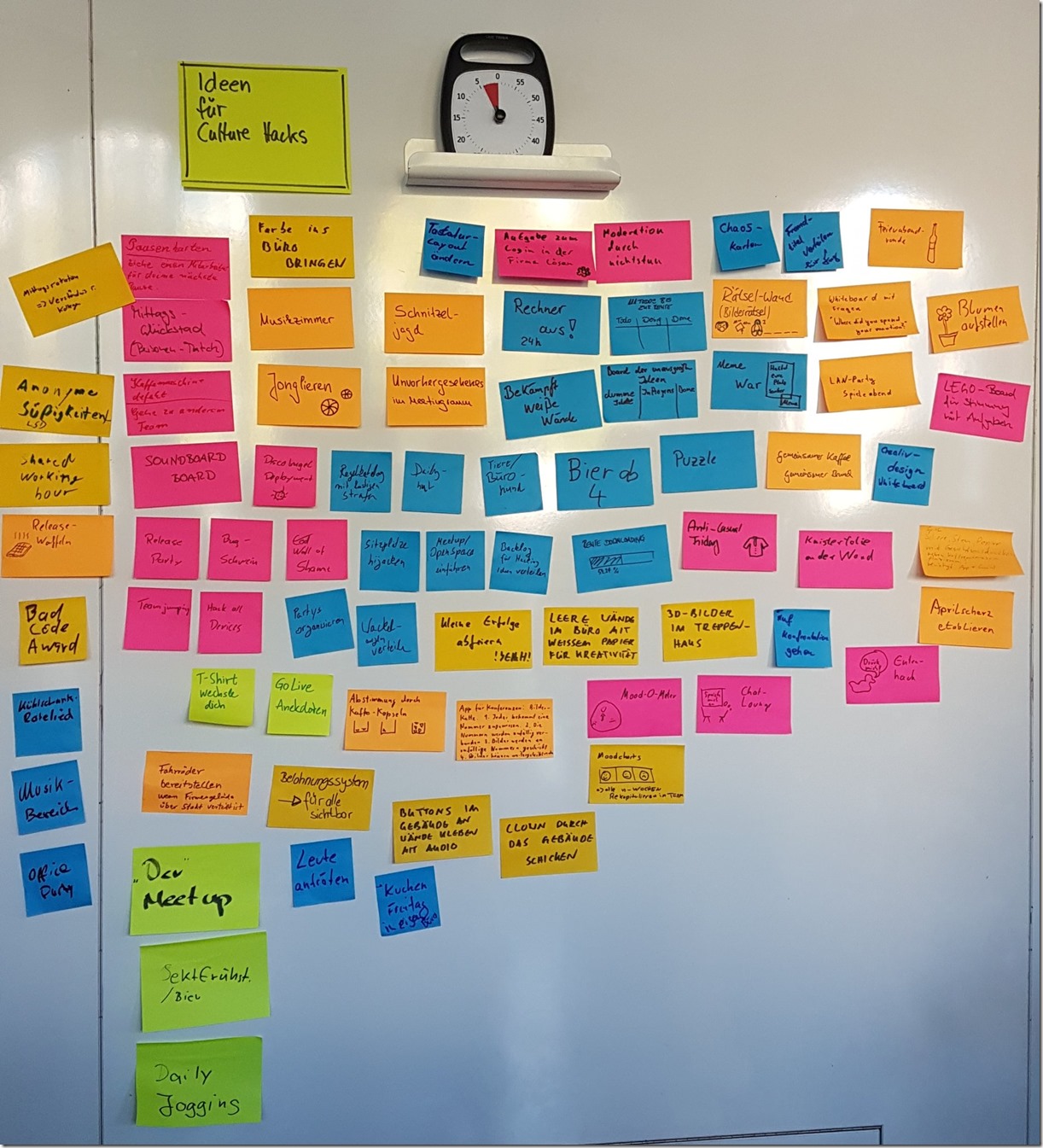

Session: Culture Hacks (did not attend)

- how to hack the culture of your firm :)

- in real life: https://www.youtube.com/watch?v=2lXh2n0aPyw